Connect Data Lake with Vince

Learn how to connect Vince to your M3 Data Lake, and when a data lake query might be an easier extract option than an M3 API. Also get some SQL examples to get you on your way!

Why call Data Lake instead of just calling M3 APIs?

Although Data Lake performance can lag behind M3 API performance, there are some business cases where it is easier to export data from the Data Lake instead of calling M3 APIs. These include:

-

Exports looking for empty values

M3 API can struggle when looking for data that is null or empty. Data Lake sql is better at discovering this sort of data condition.

Select ITNO, FACI, CSNO from MITFAC where CSNO = '' order by ITNO -

Exports with an “OR” scenario

For example, looking for item master data ether the net weight OR the gross weight is missing:

Select CONO, ITNO, NEWE, GRWE, UNMS, FUDS from MITMAS where NEWE = 0 OR GRWE = 0

Or looking for order lines in multiple specific and non-sequential statuses:

Select ORNO, PONR, ORST, ITNO from OOLINE where ORST IN ('33','44') -

Exports looking for missing information

For example, items that are present in a master pricelist, but missing completely from a dependent / subsidiary pricelist (i.e. customer-based, or alternate currency)

SELECT table1.ITNO EURITNO FROM OPRBAS table1

LEFT JOIN OPRBAS table2 ON table2.prrf = table1.prrf and table2.cuno = table1.cuno and table1.itno = table2.itno

and table2.fvdt = table1.fvdt where table2.cucd = 'NOK' and table1.cucd = 'EUR' and table1.PRRF = 'DEMO02' and table1.CUNO = 'SGDEMO01' and table2.itno is null

Guide: Using the Data Lake step in Vince Workflows

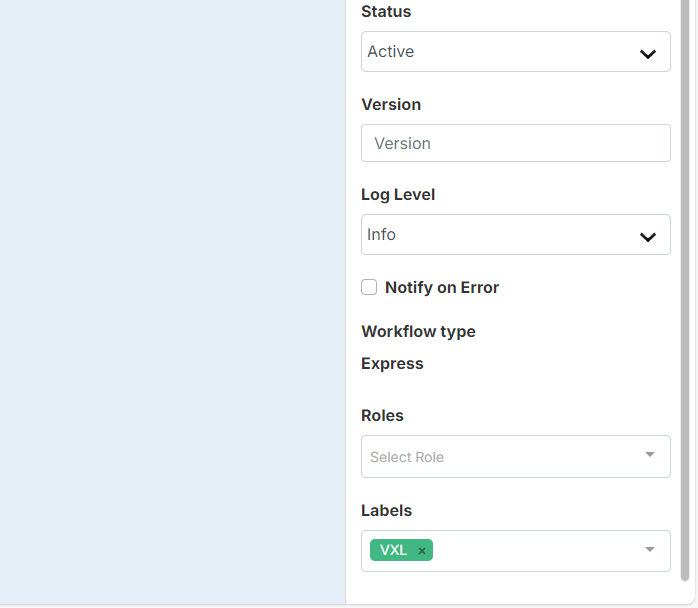

The VXL label

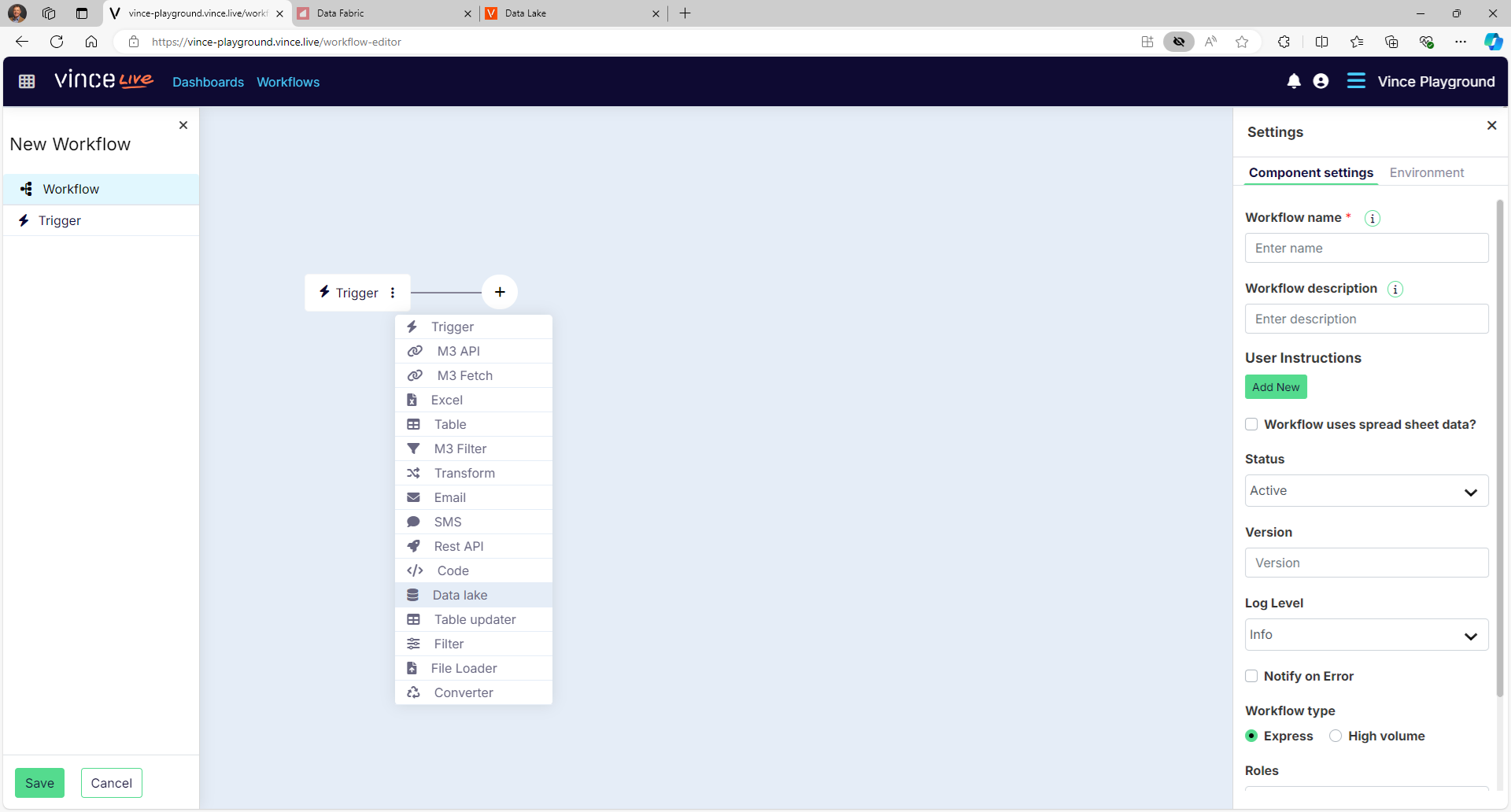

The Data Lake Step

Select the Data lake step in the workflow designer, as shown.

Activate component settings

There are 4 component settings needed to activate the data lake step

-

Endpoint – the data lake endpoint of the M3 environment to which this workflow will select data.

-

Body – the data lake sql statement

-

connectionId – A reference to the authentication credentials needed to call the endpoint

-

saveResultToS3 – instructions to save or not save the query result to an S3 “bucket” in AWS

We will detail these steps below.

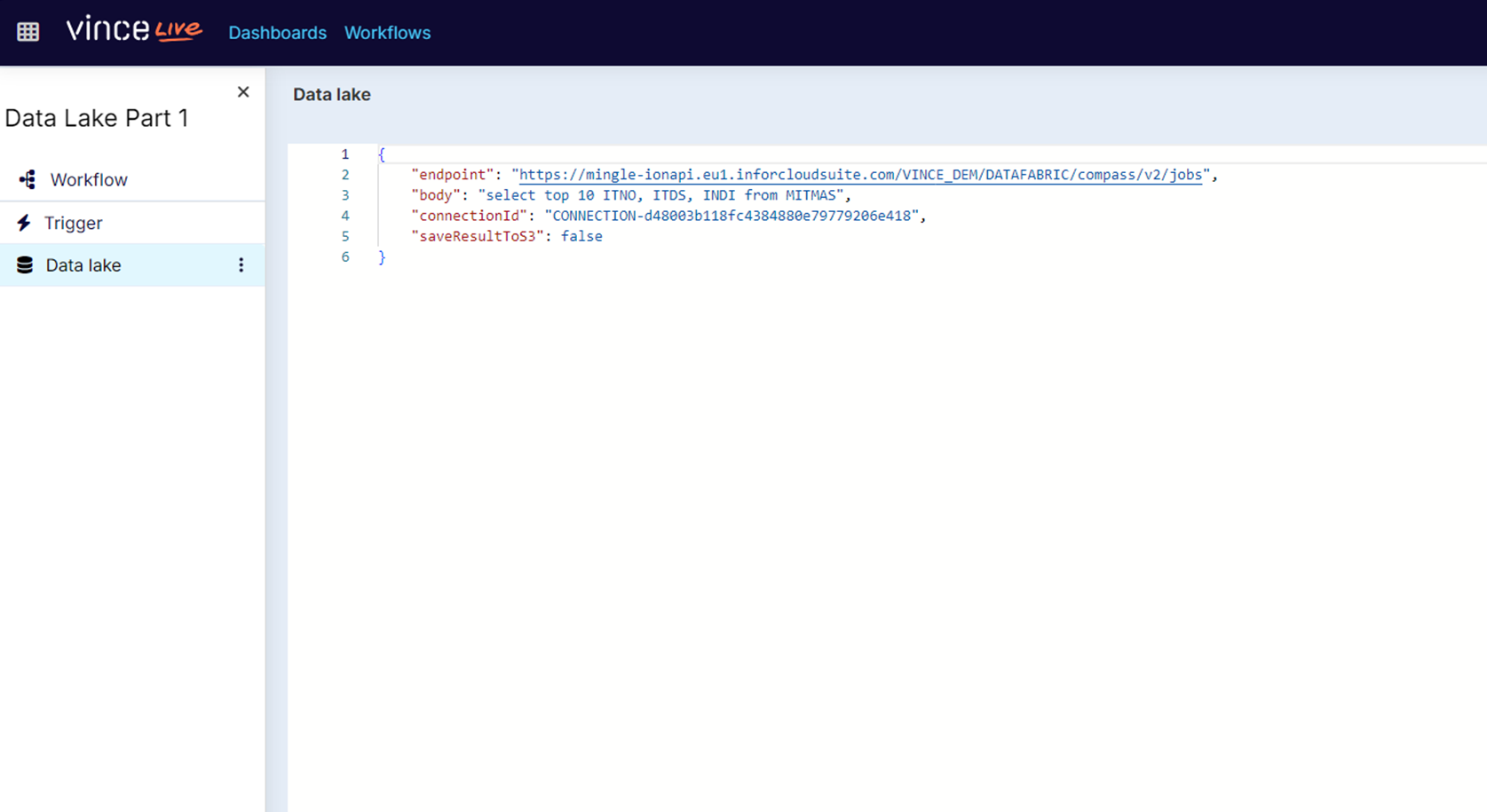

Endpoint

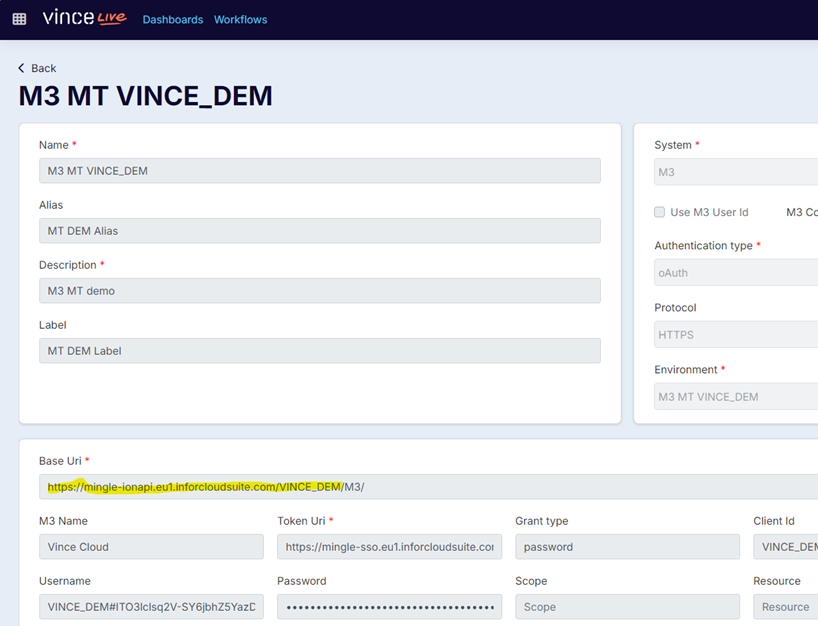

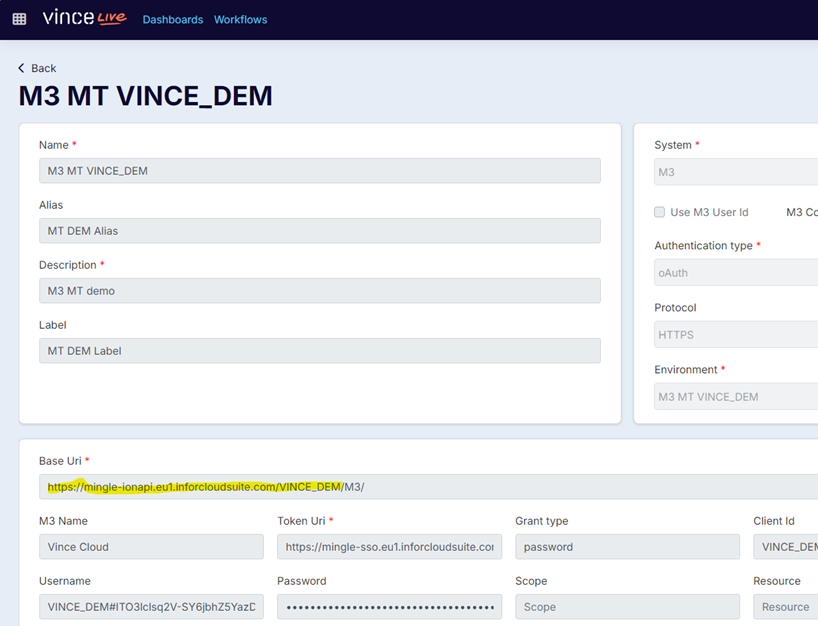

Endpoint is the datafabric/compass endpoint of your M3 environment.

The host and environment will match your vince connection “Base Uri”, for example:

Note that the “/M3/” is not part of the Data Lake endpoint. Replace the “/M3/” with the following:

“/DATAFABRIC/compass/v2/jobs”.

For Example:

"endpoint": "https://mingle-ionapi.eu1.inforcloudsuite.com/VINCE_DEM/DATAFABRIC/compass/v2/jobs",

Body

Body is the Data Lake sql statement you wish to execute. It can be a hard-coded statement, or you can also use parameters, as described in the video series.

Example of a hard-coded statement:

"body": "select top 10 ITNO, ITDS, INDI from MITMAS"

Example of a parameterized statement that contains both hard-coded elements and parameters:

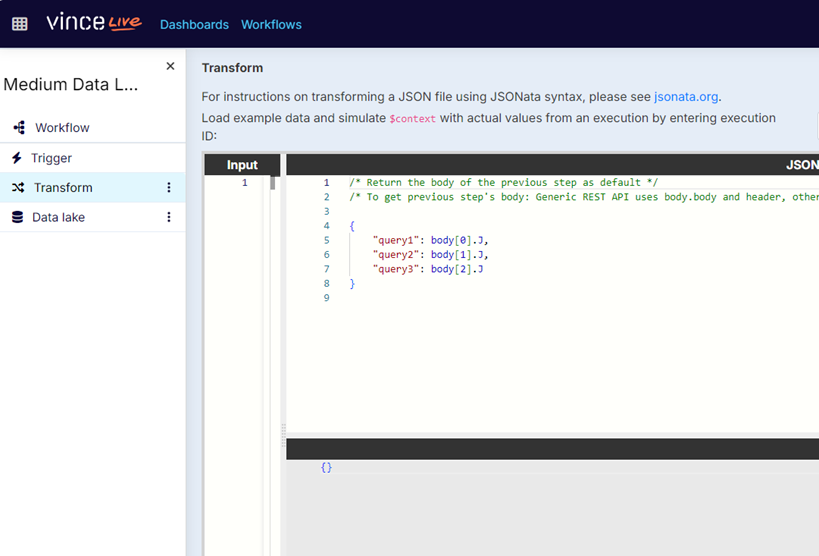

Note that if using parameters, you must define these parameters in a “transform” step that precedes the Data Lake step.

In this example, we define “query1”, “query2”, and “query3” as cells J1, J2, and J3 of the input spreadsheet:

For your convenience, here is the jsonata code:

"query1": body[0].J,

"query2": body[1].J,

"query3": body[2].J

}

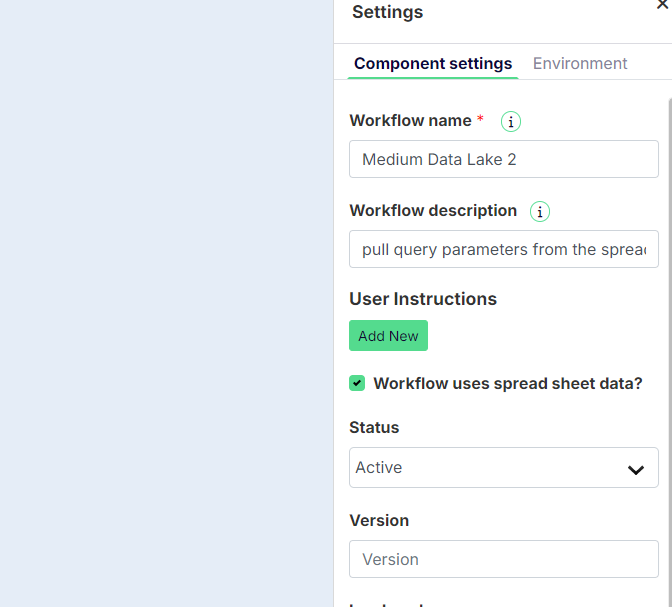

IMPORTANT: Note that if you use parameters, you must select the “Workflow uses spreadsheet data?” checkbox at the workflow level, as shown here.

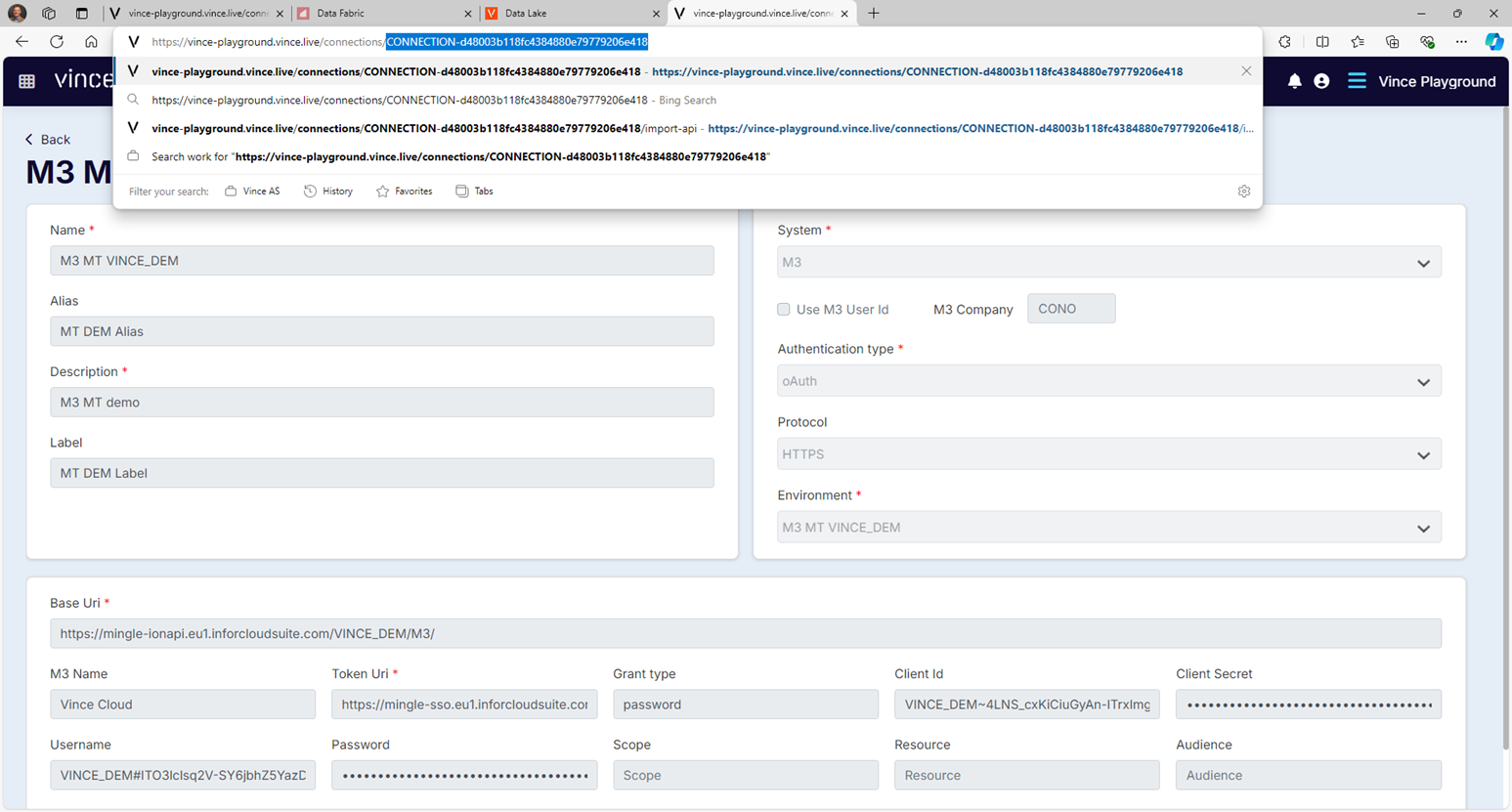

ConnectionID

A reference to the authentication credentials needed to call the data lake endpoint. This will be found in the same connection management page as the Base Uri:

Copy and paste the GUID-like connection reference from the page containing the connection, and paste into the data lake connectionId item.

For example:

"connectionId": "CONNECTION-d48003b118fc4384880e79779206e418",

saveResultToS3

Always set to “false”

"saveResultToS3": false

Tenants do not have access to the S3 data storage locations so please set this to false.